Air-Conditioning the Internet: Data Center Securitization as Atmospheric Media

Monday, April 26, 2021 at 11:23PM

Jeffrey Moro


Figure 1. As Equinix strictly forbids all photography, I have to make do in this essay with those images it sanctions. Google Street View presents a compromise, in that it is “permitted” (in the sense that Google never needs to ask permission) yet also an opportunity to see DC11 outside of Equinix’s own marketing materials.

Standing in the dim light, LEDs flickering before me, the occasional clanking duct punctuating the dull roar of whirring fans, I thought it would be colder.[1]

I didn’t know what to expect from my visit to DC11, a “peering point” in Ashburn, Virginia, owned by the multinational data center company Equinix. Its servers, which route cloud computing’s traffic and form its very substance, need the cold to counter the massive amounts of heat they generate. Here in DC11’s windowless bowels, it’s an ominously neutral sort of temperature. While the main floor more or less resembles stock photographs of data centers (all rows of black boxes locked behind chain link), the rest of DC11 could pass for any anonymous office building in the Washington, DC metro area (see Figure 1). Our tour guide, an affable man I’ll call “Mike,” tells us that this mild temperature is a testament to the efficiency of DC11’s air-conditioning systems. If we were to go into the aisles themselves (where, Mike reminds us, we are emphatically not allowed), we would feel the intensity of alternating rows of hot and cold air, the former pulled up into ventilation shafts and the latter piped down to the servers. Mike leads us to a clearing at the end of an aisle where we can stand beneath an open duct. Frigid air blasts down.

I ask Mike what would happen to DC11 if its air-conditioning systems shut down. He says that would never happen, because DC11 has a generator and the raw materials for twenty-five minutes of uptime, plus a priority link to the county’s electrical grid. “But if catastrophe did strike,” he says, “it’d be about twenty minutes to total heat death.” Air-conditioning keeps the internet alive.

This essay is about the total heat death that awaits all data and the infrastructural technologies data centers employ to delay it, although they can never eliminate it entirely. It is also about how the data center industry, locked in the anxious present of climate catastrophe, responds to the inevitability of data’s death with an unprecedented desire for security. Equinix and its DC11 data center form case studies through which I argue that those technologies we call “air-conditioning” and those we call “the internet” are, in fact, one and the same. Their interdependence has consequences for how media studies scholars approach cloud computing’s environmental and cultural impacts. From an environmental perspective, as scholars such as Mél Hogan[2] and Nicole Starosielski[3] have noted, the internet cannot function without enormous expenditures of energy for cooling and humidity control. As such, DC11’s life cycles rely not just on the management of data but also on that of air, which builds and disperses heat. From a cultural perspective, Equinix uses air as a medium of securitization: the techniques with which it keeps its servers cool map fluidly onto those with which it keeps them secure. Its data centers become modern climate bunkers, as impervious to rising atmospheric carbon as to enterprising hackers. I conclude by suggesting that this confluence of air-conditioning and the internet, which I term “atmospheric media,” illuminates how inhuman (and inhumane) the internet’s life cycles have become in our climatological moment.

Figure 2. An aerial satellite view of Ashburn, Virginia.

Where better to build a bunker than Ashburn? Here, thirty miles from DC, the United States’ urban capital frays into rural fragments. Tung-Hui Hu writes that data centers’ “insatiable demand for water and power explains why [many] are built” at a remove from urban centers.[4] Much of that energy drives cooling,[5] the requirements for which are so substantial that Equinix and its peers are now even exploring ways to suborn the climate as a passive HVAC system, placing data centers in the Arctic or at the bottom of the ocean.[6] Geographically, Ashburn has no such climatological affordances. If anything, it militates against the cool, as average summer temperatures in the DC region have increased substantially over the past fifty years.[7] But Ashburn has long been central to the internet’s infrastructure, a jewel in what Paul Ceruzzi terms “Internet Alley,” the cluster of sites from which “the internet is managed and governed.”[8] Of the fifteen data centers Equinix runs in the DC region, ten are in Ashburn.[9] What the town lacks in population (43,411, on par with the enrollment at my university), it more than makes up for in data centers. Ashburn has a higher concentration of data center space than anywhere else on Earth: 13.5 million square feet, with another 4.5 million in development as of 2019.[10] About three-quarters of the world’s internet traffic passes through this relatively small town at some point in its journey. The price of such interconnectivity in such a hot place is that DC11 must rely on the finest in air-conditioning technologies in order to stay up and running.

A data center must cultivate formal distinctions between hot and cold, which in turn underpin and direct every aspect of its operations, from its architectural design to how information routes through its servers. This project recalls similar undertakings in media studies, the resonances of which I want to tease out in the service of developing a media theoretical approach to air-conditioning. As Starosielski observes, temperature has long been a concern in the field, from Claude Shannon’s appropriation of the thermodynamic concept of entropy for his 1949 “The Mathematical Theory of Communication” to the thermal imagery of drone warfare.[11] German philosopher Peter Sloterdijk explicitly deploys the phrase “air-conditioning” to articulate the twentieth century’s project of placing the air under human control, which begins in his analysis with World War One’s gas warfare.[12] Our contemporary moment, marked by pandemic-driven temperature checks and annual “once-in-a-century” disasters, has only made these entanglements between media and temperature more explicit.

Perhaps no media scholar is more associated with the concepts of “hot” and “cool” media than Marshall McLuhan.[13] For McLuhan, hot and cool have less to do with literal temperature and more with what he terms a medium’s “definition” and “participation.” A hot medium such as radio is “high definition,” filled in with information, yet does not allow the user to participate beyond tuning the dial. Conversely, a cool medium like a cartoon is “low definition,” sketched in suggestions, and requires the reader to fill in the medium’s gaps. Cloud computing, such as that practiced in DC11, complicates this heuristic because it is simultaneously awash in information yet demands constant participation from its users to contribute more. Yet McLuhan takes care to note that a medium’s “temperature” is never absolute, but rather shifts “in constant interplay with other media.”[14] Here, I’m wary of how I’m pushing McLuhan’s admittedly vague definitions of hot and cool past the metaphorical and toward the thermodynamic. But as Dylan Mulvin and Jonathan Sterne suggest, this might be a latent tendency in McLuhan’s own theory given how his “blend of systems theory and temperature metaphors makes sense: a climate is a calibrated system, and ‘equilibrium’ in that system is all too fragile.”[15] Indicative of McLuhan’s investment in cybernetics, this homeostatic equilibrium influences how contemporary scholars such as Starosielski approach temperature as a medium of standardization. Without the precise calibration of thermal energy, media can all too easily (and quite literally) melt down.

Managing the life cycle of a data center is a matter of navigating constantly shifting temperature differentials. While there’s no single strategy for ensuring thermal equilibrium, the industry has defined best practices over the past few decades as data centers have become more common and more in demand worldwide. Despite the popular conception of data centers as icy meat lockers, many, such as DC11, run closer to room temperature than one might expect, a testament to how sophisticated targeted practices of air-conditioning have become.[16] Just as smaller personal computers use fans to cool their processors, so too do data centers rely primarily on forced-air cooling. The cooling capacity of a typical computer room air conditioner, or CRAC, exceeds that of a home unit by several orders of magnitude.[17] These units entail high energy costs, as much as 60% of a data center’s total energy load in certain conditions.[18] However, DC11 can recoup efficiency through its architecture, a design strategy few domestic and commercial spaces can afford.

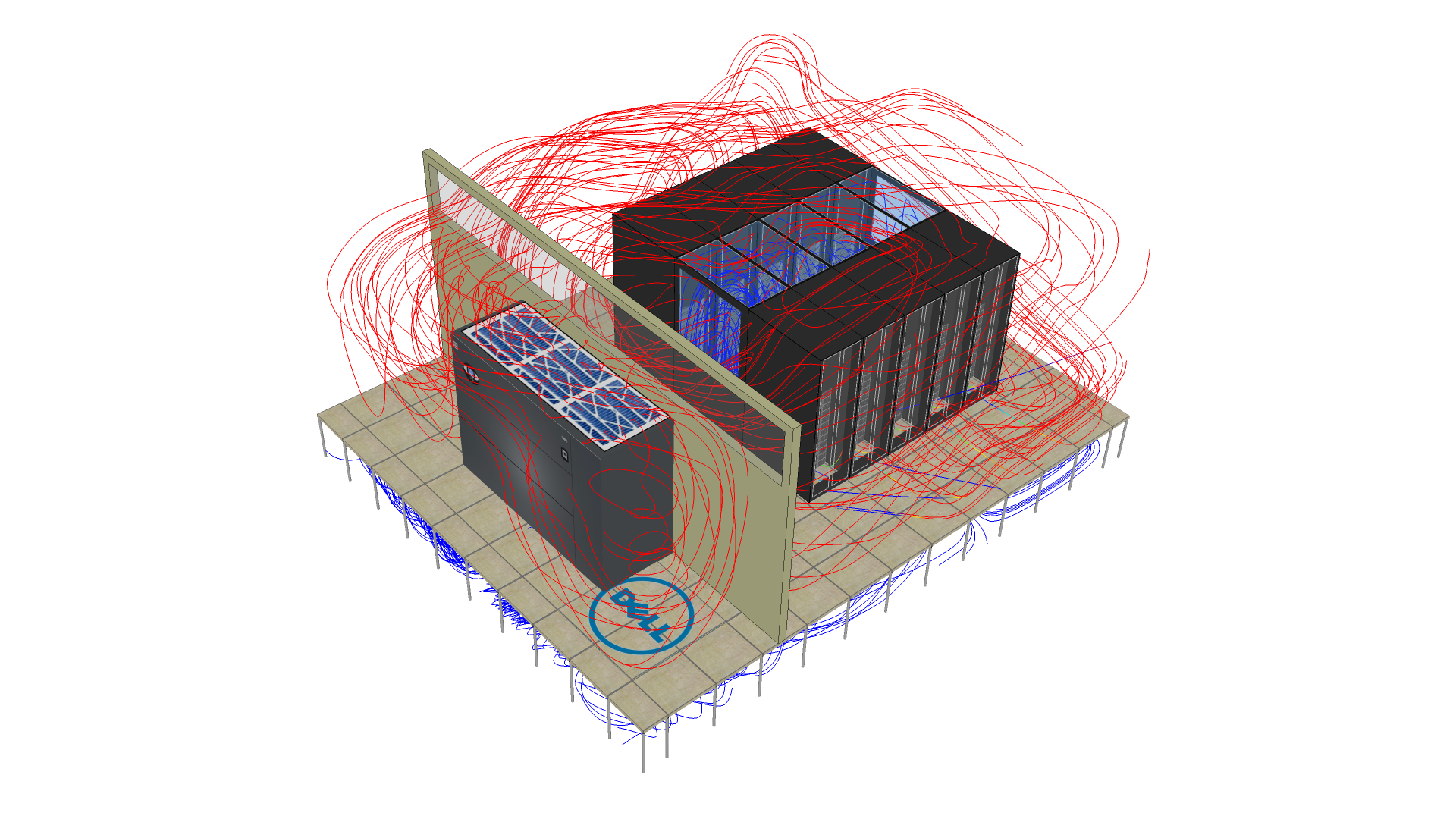

Figure 3. A diagram of cold aisle containment.

One such strategy that DC11 in particular deploys to balance cooling and efficiency is called “cold aisle containment.”[19] In cold aisle containment, data centers position their server racks in long aisles, with the fans on the backs of the racks facing each other to produce alternating aisles of hot and cold air. The data center then blocks off the “cold aisle” with purpose-built doors or hanging sheets, allowing warmer air to proliferate throughout the data center. Cold aisle containment is an unusual choice for DC11. While typically cheaper to implement than other containment strategies, it can also lead to higher energy costs. The trick is in DC11’s coupling of cold aisle containment with open-air ventilation. By releasing hot air, DC11 limits its cooling to only the amount it judges it needs. Cold aisle containment thus explains why I didn’t feel the cold when I first entered DC11. The data center keeps its cool air under lock and key, circulating it only where it must.[20]
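The separation of hot and cold air described above rests on a basic piece of thermal engineering, and a short calculation can make it concrete. The Python sketch below applies the standard sensible-heat relation for air: heat removed equals air density × airflow × specific heat × the temperature rise of the air. It uses that relation to estimate how much air must move to carry away a server load. The air properties are textbook approximations. The 10 kW rack and the temperature differences are hypothetical values chosen for illustration, not figures from DC11 or Equinix.

```python
# Illustrative sketch: the airflow needed to carry away a given heat load,
# using the sensible-heat relation Q = rho * V * c_p * delta_T.
# Air properties are textbook approximations for air near room temperature,
# not measurements from DC11; the rack load below is hypothetical.

AIR_DENSITY = 1.2            # kg/m^3, approximate
AIR_SPECIFIC_HEAT = 1005.0   # J/(kg*K), approximate


def required_airflow(heat_load_watts: float, delta_t_kelvin: float) -> float:
    """Return the volumetric airflow (m^3/s) needed to remove heat_load_watts
    when exhaust air leaves delta_t_kelvin warmer than the supply air."""
    if delta_t_kelvin <= 0:
        raise ValueError("exhaust air must be warmer than supply air")
    return heat_load_watts / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_kelvin)


# A hypothetical 10 kW rack: the smaller the usable temperature difference
# (for example, because hot exhaust mixes back into the cold supply),
# the more air must be moved to remove the same heat.
for delta_t in (5.0, 10.0, 15.0):
    flow = required_airflow(10_000, delta_t)
    print(f"delta_T = {delta_t:>4} K -> {flow:.2f} m^3/s")
```

One common engineering rationale for containment follows from this relation. Keeping hot exhaust from mixing back into the cold supply preserves a larger temperature difference, so the same heat can be removed with less moving air. That this is the specific reasoning behind DC11's design is an assumption here.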

In theorizing DC11’s air-conditioning, I am drawn to one of McLuhan’s lesser-cited concepts in his 1964 Understanding Media: The Extensions of Man, that of the “break boundary.” A break boundary, McLuhan suggests, is a point at which a medium moves so far along its hot/cool axis that it “overheats” (his thermodynamics are messy here) and course-corrects in the opposite direction.[21] Break boundaries are both material and cultural, as McLuhan notes when he describes the industrial developments of the late nineteenth century as “[heating] up the mechanical and dissociative procedures of technical fragmentation.”[22] DC11’s architectural obsession with temperature betrays literal and figurative break boundaries. In the literal sense, DC11 must guard against the overheating that would disrupt its systems’ operations. In the figurative sense, its architecture reveals how the tech industry is negotiating an oncoming climatic break boundary, as the drive toward cloud computing multiplies the demand for data centers worldwide. Cold aisle containment might forestall a break in the near future, but on a global scale, containment always leaks. As Finn Brunton observes, “the Earth’s thermal system . . . is the terminal heat sink,” invoking the technical term for the part of a computer that draws thermal energy away from vulnerable components.[23] The life cycle of a data center is one of constant negative feedback, as the targeted application of cool air delays but can never resolve servers’ own tendencies to overheat.

DC11’s atmospheric control extends beyond its servers. The entire building is a masterclass in securitization and control.[24] When I first arrived, I met my tour group in a lobby, from which we exited into a small courtyard. I counted three visible security cameras. We reentered the building through a separate entrance equipped with the first of many biometric scanners. As Mike held his hand to the scanner, he told us that the scanners checked ninety points on the hand plus body temperature. Through that door, we reached another lobby. Guards checked our IDs against a pre-approved list. We received badges that we were instructed to keep visible at all times and passed through another biometric door into an antechamber, just large enough for the fifteen of us. Mike told us that this room was called a “man-trap,” designed such that one couldn’t open either door unless both the entrance and exit were shut, a precaution against intruders and fire. Another biometric scanner and we were inside the data center proper, although not yet on the floor with the servers. Those lay behind yet another scanner. Even then, we could not enter the aisles themselves, which Equinix kept behind locked chain-link fencing that only clients could pass. Mike told us that there were further levels of security for those willing to pay, from infrared trip wires to armed guards. Despite its role as a waystation on the information superhighway, DC11 is impregnable, a bunker through which only data and air may flow.[25]
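Mike's description of the man-trap amounts to a simple interlock rule. The Python sketch below models that rule under stated assumptions: there are two doors, and a request to open either one is granted only while both are shut. The `ManTrap` class and its method names are hypothetical. They illustrate the logic described on the tour and do not represent Equinix's actual access-control system.

```python
# Hypothetical model of a two-door "man-trap" interlock, following the rule
# described on the tour: a door may open only while both doors are shut.
# Class and method names are illustrative, not Equinix's system.


class ManTrap:
    def __init__(self) -> None:
        # False means the door is shut, True means it is open.
        self.doors = {"entrance": False, "exit": False}

    def _check(self, door: str) -> None:
        if door not in self.doors:
            raise KeyError(f"unknown door: {door}")

    def request_open(self, door: str) -> bool:
        """Open `door` only if every door is currently shut; report success."""
        self._check(door)
        if any(self.doors.values()):
            return False  # interlock engaged: a door is already open
        self.doors[door] = True
        return True

    def close(self, door: str) -> None:
        self._check(door)
        self.doors[door] = False


trap = ManTrap()
print(trap.request_open("entrance"))  # True: both doors were shut
print(trap.request_open("exit"))      # False: the entrance is still open
trap.close("entrance")
print(trap.request_open("exit"))      # True: the entrance has shut again
```

The rule holds anyone inside the antechamber until one door has fully closed. This is how the room can hold people in place while the next scanner verifies them.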

This physical security apparatus is primarily aimed at deterring rogue individuals: lone hackers who might enter and compromise the servers. However, Equinix has grown more sensitive in recent years to security threats posed by nonhuman forces. Climate change poses threats to data center integrity, from sea level rise drowning coastal infrastructure to natural disasters such as hurricanes damaging networking equipment.[26] In its 2019 10-K (a formal report that US companies must file annually with the Securities and Exchange Commission), Equinix explicitly notes that climate change poses an existential threat to its operations. It writes that “the frequency and intensity of severe weather events are reportedly increasing locally and regionally as part of broader climate changes . . . [posing] long-term risks of physical impacts to our business.”[27] It notes that pressures to address climate change emerge not only from the environment itself but also from clients and investors, for whom climate change presents a significant point of anxiety. To manage this anxiety, Equinix assumes a quasi-martial stance. In a 2018 annual report on “corporate sustainability” to its investors, Equinix states that it “prioritiz[es] responsible energy usage and high standards of safety, and commit[s] to protecting against external threats such as climate change and data security” (italics mine).[28] Climate change and data security join together under the rubric of “external threats,” which Equinix must predict, plan for, and protect against.[29]

For Equinix, air-conditioning and security are contiguous projects of sustaining the liveliness of data. Wendy Hui Kyong Chun observes that our contemporary media moment is marked by data’s habitual renewal, devices begging for attention and constant updates, particularly in moments of crisis.[30] While climate change undoubtedly presents numerous crises as landmark events (think natural disasters), DC11’s emphasis on air-conditioning reveals the very nature of computing to be one of constant thermal crisis. Equinix displaces the locus of this data-driven death drive, positioning itself as a vanguard against imagined external threats. At first glance, DC11’s vision of bunker-style security seems to run counter to recent tendencies in security studies, which trend toward atmospheric distribution rather than centralization, as Lisa Parks[31] and Peter Adey[32] have noted. However, I would suggest that DC11 represents the physical incarnation of the paradox at the heart of cloud computing, namely that our digital systems are not disaggregated, but rather displaced. Our ubiquitous devices are now little more than terminals accessing computers elsewhere. Proliferating the cloud entails centralizing the data center, as servers’ thermal requirements are so specific that they are difficult to decentralize further. Dispersed, immersive, atmospheric security in one location requires centralized, locked-down security in another. The endless recurring thermal crises, the break boundaries that threaten the cloud’s stability, demand ever-more sophisticated techniques of air-conditioning.

DC11 becomes a site of acute thermodynamics, as server heat multiplies server heat. If anything, the true threat comes from within, not without, as unchecked servers would overheat themselves into oblivion. Put bluntly: the tech industry makes our planet hot in the service of keeping its computers cool. This, I suggest, is what makes DC11 a specifically atmospheric media object. DC11’s reliance on and manipulation of air contributes to the cloud’s formal tendencies toward displacement and (re)centralization. Air expedites the transformation of data centers into climate bunkers. Furthermore, the air’s perceived insubstantiality, compared with other subjects of environmental media study, such as rare earth metals or wastewater, makes its pollution that much more challenging to account for. Faced with these atmospheric operations, media studies must develop analytical techniques that pierce through the data center’s security veil to reveal how the cloud now programs the atmosphere against itself.

Notes

[1] This essay began as a presentation at the 2019 meeting of the Association for the Study of the Arts of the Present. I thank my co-panelists, Sara J. Grossman, Ben Mendelsohn, and Salma Monani, as well as our audience, for helping me refine my presentation and for encouraging its publication. I also thank Matthew Kirschenbaum, Skye Landgraf, Purdom Lindblad, and Setsuko Yokoyama for their feedback on earlier drafts. Jason Farman organized the tour of DC11 that sparked this project and many subsequent ones; conversations with him and fellow members of the tour were crucial to its conceptualization. Finally, I thank Miguel Penabella and Amaru Tejada for shepherding this issue to completion, and my two anonymous reviewers for their incisive and enriching feedback.

[2] Mél Hogan, “Big Data Ecologies,” Ephemera 18, no. 3 (2018): 631–57.

[3] Nicole Starosielski, “Thermocultures of Geological Media,” Cultural Politics 12, no. 3 (November 2016): 293–309.

[4] Tung-Hui Hu, A Prehistory of the Cloud (Cambridge, MA: The MIT Press, 2015), 79.

[5] Fred Pearce, “Energy Hogs: Can World’s Huge Data Centers Be Made More Efficient?” Yale E360, 3 April 2018, e360.yale.edu/features/energy-hogs-can-huge-data-centers-be-made-more-efficient.

[6] For more on Arctic data centers, see Asta Vonderau, “Storing Data, Infrastructuring the Air: Thermocultures of the Cloud,” Culture Machine 18 (2019): culturemachine.net/vol-18-the-nature-of-data-centers/storing-data/; Alix Johnson, “Emplacing Data within Imperial Histories: Imagining Iceland as Data Centers’ ‘Natural Home,’” Culture Machine 18 (2019): culturemachine.net/vol-18-the-nature-of-data-centers/emplacing-data/. For more on undersea data centers, see John Roach, “Under the Sea, Microsoft Tests a Datacenter that’s Quick to Deploy, Could Provide Internet Connectivity for Years,” Microsoft Press Releases, 5 June 2018, news.microsoft.com/features/under-the-sea-microsoft-tests-a-datacenter-thats-quick-to-deploy-could-provide-internet-connectivity-for-years/.

[7] Ian Livingston and Jason Samenow, “Data Show Marked Increase in DC Summer Temperatures over Past 50 Years,” The Washington Post, 26 August 2016, www.washingtonpost.com/news/capital-weather-gang/wp/2016/08/26/data-show-marked-and-consequential-increase-in-d-c-summer-temperatures-over-last-50-years/.

[8] Paul E. Ceruzzi, Internet Alley: High Technology in Tysons Corner, 1945–2005 (Cambridge, MA: The MIT Press, 2008), 135.

[9] Equinix, “Equinix International Business Exchange Sustainability Quick Reference Guide,” Equinix, August 2019, sustainability.equinix.com/wp-content/uploads/2020/05/GU_IBX-Sustainability-Quick-Reference_US-EN-3.pdf, accessed 22 March 2021.

[10] Loudoun County, “Data Centers,” Loudoun County Economic Development, VA, 2019, biz.loudoun.gov/key-business-sectors/data-centers/, accessed 22 March 2021.

[11] Starosielski, “Thermocultures of Geological Media,” 294. A special section of the International Journal of Communication 8 (2014) on “Media, Hot and Cold,” edited by Dylan Mulvin and Jonathan Sterne, is an excellent collection of work on media and temperature. In addition to contributions by Starosielski, I also draw from: Wolfgang Ernst, “Fourier(’s) Analysis: ‘Sonic’ Heat Conduction and Its Cold Calculation,” International Journal of Communication 8 (2014): 2535–39; Lisa Parks, “Drones, Infrared Imagery, and Body Heat,” International Journal of Communication 8 (2014): 2518–21.

[12] Peter Sloterdijk, Terror from the Air, trans. Amy Patton and Steve Corcoran (Cambridge, MA: Semiotext(e), 2009), 10.

[13] Marshall McLuhan, Understanding Media: The Extensions of Man, 1st MIT Press ed. (Cambridge, MA: The MIT Press, 1994), 22–32.

[14] Ibid., 26.

[15] Dylan Mulvin and Jonathan Sterne, “Media, Hot and Cold Introduction: Temperature Is a Media Problem,” International Journal of Communication 8 (2014): 2497.

[16] The shift also owes in no small part to an influential 2011 white paper by the American Society of Heating, Refrigerating, and Air-Conditioning Engineers (ASHRAE), which recommends that data centers be kept between 65°F/18°C and 81°F/27°C and at 60% relative humidity, counter to long-standing industry assumptions about the need for much cooler temperatures. The story of air-conditioning the internet is also one of shifting industrial standards. See: ASHRAE Technical Committee (TC) 9.9, “Data Center Power Equipment Thermal Guidelines and Best Practices,” ASHRAE, 2016, tc0909.ashraetcs.org/documents/ASHRAE_TC0909_Power_White_Paper_22_June_2016_REVISED.pdf, accessed 22 March 2021.

[17] John Niemann, Kevin Brown, and Victor Avelar, “Impact of Hot and Cold Aisle Containment on Data Center Temperature and Efficiency,” APC White Paper 135, revision 2 (Schneider Electric, 2011), facilitiesnet.com/whitepapers/pdfs/APC_011112.pdf, accessed 22 March 2021.

[18] Jiacheng Ni and Xuelian Bai, “A Review of Air Conditioning Energy Performance in Data Centers,” Renewable and Sustainable Energy Reviews 67 (January 2017): 625.

[19] Phil Schwartzmann, “Everything You Need to Know about DC11: Q&A with Data Center Guru Jim Farmer,” Equinix, May 2013, blog.equinix.com/blog/2013/05/10/everything-you-need-to-know-about-dc11-qa-with-data-center-guru-jim-farmer/.

[20] My colleague Kyle Bickoff first encouraged me to investigate Equinix’s containment strategies. His work on media and containment has influenced my thinking in this regard. See: Kyle Bickoff, “Digital Containerization: A History of Information Storage Containers for Programmable Media” (paper presented at MITH Digital Dialogue, Maryland Institute for Technology in the Humanities, University of Maryland, 16 April 2019), mith.umd.edu/dialogues/dd-spring-2019-kyle-bickoff/.

[21] McLuhan, Understanding Media, 38.

[22] Ibid., 39.

[23] Finn Brunton, “Heat Exchanges,” in The MoneyLab Reader: An Intervention in Digital Economy, ed. Geert Lovink, Nathan Tkacz, and Patricia de Vries (Amsterdam, NL: Institute of Network Cultures, 2015), 168.

[24] Equinix takes security so seriously that it has even patented its security system. Its primary contribution, it suggests in its patent, is how its multi-level security system provides unprecedented access to people’s locations within its data centers, effectively rendering people as data points. See: Albert M. Avery IV, Jay Steven Adelson, and Derrald Curtis Vogt, “Multi-ringed internet co-location facility security system and method,” US Patent 6971029, filed 29 June 2001, issued 29 November 2005.

[25] I was struck by how much my tour of DC11 resembled earlier tours of Equinix data centers that Andrew Blum describes in his 2012 book Tubes: A Journey to the Center of the Internet. Indeed, the tour that Mike gave us was specifically modeled after those he gives for potential clients. Given data centers’ reputation as secure locations, a reputation reflected even in DC11’s architecture, Equinix was remarkably forthcoming and open about its design practices. Though separated by nearly a decade, perhaps the common ground between Blum’s experience and mine is a testament to the role of marketing in the data center industry. See: Andrew Blum, Tubes: A Journey to the Center of the Internet (New York: HarperCollins, 2012), 94.

[26] Ramakrishnan Durairajan, Carol Barford, and Paul Barford, “Lights Out: Climate Change Risk to Internet Infrastructure,” in Proceedings of the Applied Networking Research Workshop (Montreal: ACM, 2018), 9–15, dl.acm.org/doi/10.1145/3232755.3232775.

[27] Equinix, “SEC Form 10-K,” Q4 2019, investor.equinix.com/static-files/a1a5ed07-df14-42ed-92a2-6bfed1d1470d, accessed 22 March 2021.

[28] Equinix, “Corporate Sustainability Report: Connecting with Purpose,” 2019, sustainability.equinix.com/wp-content/uploads/2019/12/Sustainability-Report-2018.pdf, 4, accessed 22 March 2021.

[29] In this stance, Equinix is joined by the United States military, which has repeatedly cited climate change as a potential threat to national security, particularly under the Obama administration. Indeed, Equinix has explicit military collaborations: an internal reporting document makes a brief reference to a DC-area data center named DC97, located in a “SCIF,” or sensitive compartmented information facility. (Perhaps the best-known SCIF is the White House’s Situation Room.) Notably, this location makes DC97 exempt from the renewable energy reportage that Equinix publishes for its other data centers, including DC11. Under conditions of total secrecy, even energy becomes a security concern.

[30] Wendy Hui Kyong Chun, Updating to Remain the Same: Habitual New Media (Cambridge, MA: The MIT Press, 2016).

[31] Lisa Parks, Rethinking Media Coverage: Vertical Mediation and the War on Terror (New York: Routledge, 2018), 13–14.

[32] Peter Adey, “Security Atmospheres or the Crystallisation of Worlds,” Environment and Planning D: Society and Space 32, no. 5 (October 2014): 835.

Jeffrey Moro is a PhD candidate in English with a certificate in Digital Studies at the University of Maryland. He has written for Amodern and the Los Angeles Review of Books. Prior to joining UMD, he was Post-Baccalaureate Resident in Digital Humanities at Five Colleges, Inc. His website is https://jeffreymoro.com/.